Jeffrey Wenstrup, Ph.D., Sharad Shanbhag, Ph.D., and Mahtab Tehrani, Ph.D. (NEOMED)

Vocalizations reflect our emotions. When we listen to another person's vocal signals, we assign them a meaning determined by the vocalization itself, our previous experience, and our own emotional state. This meaning in turn shapes how we respond to those signals: through our posture, facial expressions, movement, and speech. Our goal is to understand how the brain shapes our responses to vocal signals.

Our Models

We use bats and mice as models to study these processes. Bats are auditory specialists that use vocalizations both to communicate and, through echolocation, to navigate and catch prey. Mice are acoustic generalists that integrate acoustic and other sensory information during social interactions. Both models provide valuable insights into the mechanisms underlying acoustic communication and emotion.

Current Project

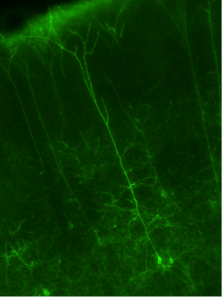

We have a large data set of recorded neural responses to vocalizations in the amygdala. We analyze these data with custom MATLAB programs developed in the lab. We are looking for an undergraduate with programming experience to help develop new visualization and analysis code for this data set. Experience with MATLAB is preferred, but experience with other programming languages such as Python or C is also acceptable. The student will be expected to work with the existing MATLAB code and to develop new code in MATLAB. Experience with git and GitHub is also desirable.